How to Detect Data Drift in AI Annotation in ML Models

The basic premise behind every machine learning algorithm or model is that the data used to train it represents real-world data.

The basic premise behind every machine learning algorithm or model is that the data used to train it represents real-world data.When you train a model in supervised learning, we usually do data labeling and annotation, but there is no actual label when you deploy the model in production. No matter how accurate your model is, the predictions will only be correct if the data uploaded to the model in production mimics the data used in training. What if it doesn't work out? It's referred to as "data drift."

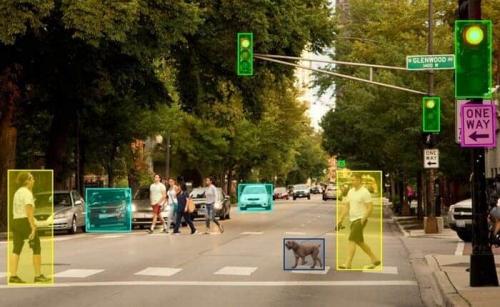

Data drift is a phenomenon that can occur when the data changes over time. This can cause the model to produce inaccurate results. In computer vision, this can happen when the image annotation or video labeling used to train the model or machine learning annotation are different from the images or videos used to test the model.

Types of Data Drift

1. Concept Drift

The statistical features of the target variable, which the model is attempting to forecast, vary with time, referred to as concept drift. This presents issues since forecasts become less accurate and untrustworthy as a result.

2. Drift in Covariate

The change in the distribution of one or more of the dataset's independent variables or input variables is known as covariate shift. This suggests that the feature's distribution has altered while the connection between the component and the target variable is the same. The same model created earlier will not offer unbiased results if the statistical features of the input data change. As a result, projections and AI annotation are wrong.

How to detect data drift in your machine learning project?

There are several ways to detect data drift in your computer vision machine learning (CVML) project; some of them are explained below:

1. Compare the training dataset to the validation dataset.

This can be done by visually inspecting the images, video labeling or looking at statistics such as mean, standard deviation, and histogram. If there is a notable difference between them, then this could indicate that your training data, i.e., data labeling and annotation, has drifted over time.

2. Train and evaluate different datasets

This can be done randomly by splitting the machine learning annotation (dataset) into two parts, training on one part, and evaluating. You can then repeat this process multiple times until you have trained and considered all possible combinations of datasets in AI annotation.

If there is a considerable distinction between them, then this could indicate that your training data has drifted.

3. Compare model results with human evaluation.

This can be done by asking humans to evaluate the model performance on a validation dataset. If there is a significant difference between the two, this could imply that your training data has drifted over time.

Additionally, you can also ask humans how they would classify an image if given multiple choices (e.g., cat or dog) and then compare these results to what the model predicts.

4. Compare different algorithms

This can be done by comparing how different algorithms perform on the same validation dataset (machine learning annotation). If there is a significant disparity between them, this could signify that your training data has drifted over time or vice versa (the validation dataset has drifted).

There are many ways to detect data drift in your computer vision machine learning project, and the approach you choose will depend on the specific situation.

However, by following the methods described above, you can increase the likelihood of detecting and preventing data drift before it becomes a problem in your machine learning project.

Conclusion

Drift detection is an essential phase in the AI annotation and machine learning lifecycle, and it should not be overlooked. It should be automated, and significant consideration should be given to the drift techniques, thresholds to apply, and actions to be done when drift is discovered.

Comments