Using Generative AI for Effective Content Moderation

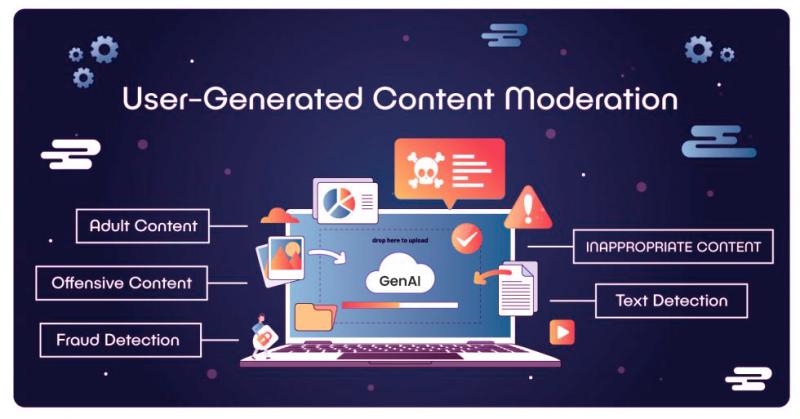

Generative AI used in content moderation, especially for social media platforms, is trained to recognize and interpret the many languages and expressions that users speak or write in.

User-generated content (UGC) is a big part of the internet, and brands use it in their content marketing strategy to build recognition and trust. It comprises social media posts and comments, video content, photographs, selfies, gaming content, and online reviews.

As the volume of online content increases, it becomes overwhelming for human moderators to efficiently manage and review all content. Nowadays, businesses look for content moderation services to ensure their digital spaces remain welcoming, safe, free from negativity, and compliant with platform policies.

In this post, we will analyze how generative AI can transform the way digital platforms moderate content that violates the organization’s policies.

Need for Content Moderation Services

As the volume of UGC has risen, it has brought both opportunities and challenges, and moderating that content also offers useful insight into recurring patterns. Let us look at how the growth of UGC leads businesses to seek out moderation services:

Increase in User Engagement → Rise of UGC

The use of social media platforms such as Reddit, Quora, Facebook, Instagram, TikTok, Threads, and YouTube means more users are creating and sharing content online. This digitization has ushered in a new era of expression, empowering users to form opinions, share experiences, and participate in online conversations. Businesses, influencers, and regular users are generating significant amounts of content in real time.

UGC Attracts Diverse Audiences → Risk of Harmful Content

UGC brings diverse perspectives, and it can be good or bad. Some users may intentionally post harmful content, such as misinformation, hate speech, harassment, or spam. Even well-meaning users, like celebrities and influencers, sometimes share inaccurate information that goes viral.

Volume and Variety of UGC → Need for Content Moderation

The sheer volume and variety of UGC mean that businesses must have effective content moderation in place. With moderation, users are protected against false information, monetary loss, or data breaches caused by content containing spam links, etc.

Moderation Protects Brand and Community → Balance Between Engagement and Safety

Digitization and the rise of social media have put pressure on businesses to be socially and ethically responsible. Moderators, whether automated tools or human reviewers, ensure that UGC complies with platform rules and societal norms. Content moderation solutions now apply to most industries and sectors, helping them protect their brand reputation and ensure that users feel safe and respected.

As UGC Grows, Moderation Technology Evolves

More UGC means more content to scrutinize, so the moderation method must be both fast and accurate. Manual moderation alone is insufficient to handle the massive scale of UGC. Therefore, AI-driven moderation tools have become increasingly important for detecting inappropriate or harmful content in real time.
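One common way to combine automated tools with human review is to route each item by the model's confidence. The snippet below is a minimal sketch of that idea, not a description of any particular platform's pipeline. The `route` function, the score range of 0 to 1, and both threshold values are assumptions made for illustration.

```python
def route(harm_score, remove_at=0.9, review_at=0.5):
    """Decide what to do with one piece of UGC.

    harm_score is a hypothetical model output between 0 and 1,
    where higher means "more likely harmful". The thresholds are
    illustrative defaults, not recommended values.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score >= remove_at:
        return "remove"        # confident enough to act automatically
    if harm_score >= review_at:
        return "human_review"  # uncertain, so escalate to a moderator
    return "publish"           # low risk, let it through


# Example: three items with different (made-up) scores
for score in (0.95, 0.60, 0.10):
    print(score, route(score))
```

With this kind of split, human moderators only see the uncertain middle band instead of every post, which is how automation helps with scale without removing people from the loop.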

Content Moderation filters, monitors, and validates publicly available content to ensure company credibility and professionalism. Today, third parties often provide these services to companies.

Benefits of Generative AI for Content Moderation

Gen AI detects and screens content far faster than human moderators can, helping social media platforms remain safe and free from negativity even as the volume of UGC grows.

Some notable benefits are as follows:

It can handle routine tasks, such as filtering out spam, detecting explicit tone and language, and flagging inappropriate images.

It can make consistent decisions based on patterns, context, and language nuances, minimizing personal biases.

It can offer near-instantaneous responses by processing and analyzing vast amounts of real-time data, at a scale that human moderators could not match.

It can identify and mitigate harmful content trends, such as misinformation, harassment, hate speech, discrimination, or newer ones like cyberbullying and deepfake videos.

It can catch misleading and hurtful user-generated content before it reaches a wider audience, making digital platforms much safer for everyone.

It can improve the Area Under the Curve (AUC), a standard measure of how well a classifier separates two classes, usually computed on the ROC curve. A higher AUC means the AI is getting better at distinguishing between harmful and benign content, which helps reduce both false positives (unnecessary removals) and false negatives (harmful content that is allowed through).
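To make the AUC point concrete, here is a small sketch that computes it by hand. It uses the pairwise definition of ROC AUC: the probability that a randomly chosen harmful item receives a higher score than a randomly chosen benign one. The labels and scores are invented toy data, and the function names `roc_auc` and `error_counts` are illustrative.

```python
def roc_auc(labels, scores):
    """ROC AUC via pairwise comparison (1 = harmful, 0 = benign)."""
    harmful = [s for y, s in zip(labels, scores) if y == 1]
    benign = [s for y, s in zip(labels, scores) if y == 0]
    if not harmful or not benign:
        raise ValueError("need at least one harmful and one benign example")
    wins = 0.0
    for h in harmful:
        for b in benign:
            if h > b:
                wins += 1.0   # harmful item correctly ranked higher
            elif h == b:
                wins += 0.5   # ties count as half
    return wins / (len(harmful) * len(benign))


def error_counts(labels, scores, threshold):
    """Count false positives and false negatives at one threshold."""
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    return fp, fn


# Toy data: made up for illustration only
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]

print(round(roc_auc(labels, scores), 3))
print(error_counts(labels, scores, threshold=0.5))
```

On this toy data, eight of the nine harmful/benign pairs are ranked correctly, so the AUC is about 0.89, and at a threshold of 0.5 there is one false positive and one false negative. An AUC of 1.0 would mean every harmful item outranks every benign one.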

Traditional rule-based algorithms for content moderation struggle to keep up with the complexity of digital content. Gen AI, by contrast, is more context-aware in addressing challenges like misinformation and harmful speech, and in flagging inappropriate content accurately.

Gen AI-driven moderation allows for the rapid processing of large volumes of data, significantly speeding up moderation pipelines. Furthermore, it helps businesses respond in real time to problematic content, reducing the risk of harm or reputational damage due to delayed action.
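A quick way to see the limits of rule-based filtering is to run a simple keyword blocklist over two messages. This is a deliberately naive sketch. The blocklist, the sample messages, and the `keyword_flag` function are all hypothetical and only illustrate the general weakness of matching words without context.

```python
import re

# Hypothetical blocklist for illustration
BLOCKLIST = {"scam", "spam"}


def keyword_flag(text):
    """Flag text if any whole word appears in the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


# (message, is it actually harmful?)
samples = [
    ("Great seller, definitely not a scam.", False),
    ("Send me your password to claim your prize.", True),
]

for text, harmful in samples:
    print(f"flagged={keyword_flag(text)} harmful={harmful} :: {text}")
```

The first message is a positive review but gets flagged because it contains a blocked word, while the second is a phishing attempt that passes because no blocked word appears. A context-aware model is meant to handle both cases by looking at what the message is saying rather than which words it contains.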

Where Do Data Labeling Companies Fit in Content Moderation?

Moderation may seem easy, but effective content moderation usually calls for a data labeling company.

For example, to address multiple languages and cultural contexts, your platform needs not only AI tools and training data but also highly skilled multilingual labelers who can moderate content in diverse languages like English, Spanish, French, and more. Do you think your internal team will suffice? Not necessarily!

This means that content service providers are important for moderation and curation. They ensure platforms are moderated consistently across global regions, considering cultural and linguistic variations.

Third-party content moderation services offer:

Professional Labelers for Various Domains

Handling digital content often requires making nuanced decisions regarding hate speech, misinformation, defamatory remarks, and statements that are sensitive to societal norms. Here, the data annotation partner provides professionally labeled training data so that AI models can operate accurately while capturing the subtleties of content.

Facilitating Multilingual Moderation

Because platforms are global in scope, labeled data needs to cover a variety of languages as well as tasks such as entity recognition and text classification. To ensure that AI models are trained to spot or highlight unsafe information across many linguistic and cultural contexts, data labeling firms employ domain experts knowledgeable in local languages.

Increasing Data Pipeline Speed

If a data labeling firm could expedite the content moderation process by 10X, the savings in time and money would be significant. Faster data pipelines enable faster updates to AI models, preserving the effectiveness of content filtering in an ever-changing online environment.

What’s next?

To sum up, we now know how important generative AI is for content moderation. It makes content services faster, more accurate, and more consistent. By working with an experienced content moderation partner, businesses can follow best practices and build more reliable and safer digital platforms.

Choosing the right content services for integrating generative AI into content moderation is crucial. They help you adapt to changing regulations, conform to a pre-determined set of guidelines for moderation efforts, and ensure a secure, inclusive online environment.
