Should We Fear AI?

Choosing Sides

If tech experts are to be believed, artificial intelligence (AI) has the potential to transform the world. But those same experts don’t agree on what kind of effect that transformation will have on the average person. Some believe that humans will be much better off in the hands of advanced AI systems, while others think it will lead to our inevitable downfall.

How could a single technology evoke such vastly different responses from people within the tech community?

Artificial intelligence is software built to learn or solve problems, processes typically performed in the human brain. Digital assistants like Amazon’s Alexa and Apple’s Siri, along with Tesla’s Autopilot, are all powered by AI. Some forms of AI can even create visual art or write songs.

There’s little question that AI has the potential to be revolutionary. Automation could transform the way we work by replacing humans with machines and software. Further developments in the area of self-driving cars are poised to make driving a thing of the past. Artificially intelligent shopping assistants could even change the way we shop. Humans have always controlled these aspects of our lives, so it makes sense to be a bit wary of letting an artificial system take over.

The Lay Of The Land

AI is fast becoming a major economic force. According to a McKinsey Global Institute study reported by Forbes, between $8 billion and $12 billion was invested in the development of AI worldwide in 2016 alone. A report from analysts with Goldstein Research predicts that, by 2023, AI will be a $14 billion industry.

KR Sanjiv, chief technology officer at Wipro, believes that companies in fields as disparate as health care and finance are investing so much in AI so quickly because they fear being left behind. “So as with all things strange and new, the prevailing wisdom is that the risk of being left behind is far greater and far grimmer than the benefits of playing it safe,” he wrote in an op-ed published in TechCrunch last year.

Games provide a useful window into the increasing sophistication of AI. Case in point: developers such as Google’s DeepMind and Elon Musk’s OpenAI have been using games to teach AI systems how to learn. So far, these systems have bested the world’s greatest players of the ancient strategy game Go, and even more complex games like Super Smash Bros. and Dota 2.

On the surface, these victories may sound incremental and minor; AI that can play Go can’t navigate a self-driving car, after all. But on a deeper level, these developments are indicative of the more sophisticated AI systems of the future. Through these games, AI becomes capable of complex decision-making that could one day translate into real-world tasks. Software that can play highly complex games like StarCraft could, with a lot more research and development, autonomously perform surgeries or process multi-step voice commands.

When this happens, AI will become incredibly sophisticated. And this is where the worrying starts.

AI Anxiety

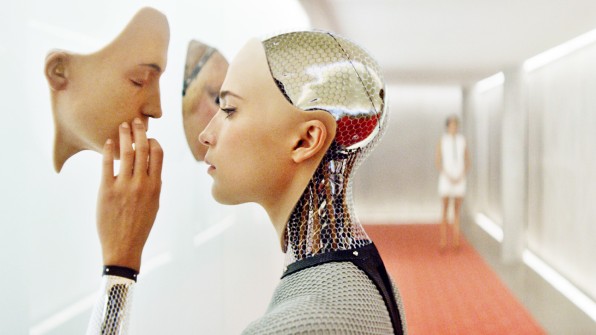

Wariness surrounding powerful technological advances is not novel. Various science fiction stories, from The Matrix to I, Robot, have exploited viewers’ anxiety around AI. Many such plots center on a concept called “the Singularity,” the moment in which AIs become more intelligent than their human creators. The scenarios differ, but they often end with the total eradication of the human race, or with machine overlords subjugating people.

Several world-renowned science and tech experts have been vocal about their fears of AI. Theoretical physicist Stephen Hawking famously worries that advanced AI will take over the world and end the human race. If robots become smarter than humans, his logic goes, the machines would be able to create unimaginable weapons and manipulate human leaders with ease. “It would take off on its own, and redesign itself at an ever-increasing rate,” he told the BBC in 2014. “Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

Elon Musk, the futurist CEO of ventures such as Tesla and SpaceX, echoes those sentiments, calling AI “…a fundamental risk to the existence of human civilization,” at the 2017 National Governors Association Summer Meeting.

Neither Musk nor Hawking believes that developers should stop working on AI, but they agree that government regulation should ensure the tech does not go rogue. “Normally, the way regulations are set up is a whole bunch of bad things happen, there’s a public outcry, and after many years, a regulatory agency is set up to regulate that industry,” Musk said during the same NGA talk. “It takes forever. That, in the past, has been bad, but not something which represented a fundamental risk to the existence of civilization.”

Hawking believes that a global governing body needs to regulate the development of AI to prevent a particular nation from becoming superior. Russian President Vladimir Putin stoked this fear at a meeting with Russian students in early September, when he said, “The one who becomes the leader in this sphere will be the ruler of the world.” These comments further hardened Musk’s position: he tweeted that the race for AI superiority is the “most likely cause of WW3.”

Musk has taken steps to combat this perceived threat. He, along with startup guru Sam Altman, co-founded the non-profit OpenAI in order to guide AI development towards innovations that benefit all of humanity. According to the company’s mission statement: “By being at the forefront of the field, we can influence the conditions under which AGI is created.” Musk also founded a company called Neuralink intended to create a brain-computer interface. Linking the brain to a computer would, in theory, augment the brain’s processing power to keep pace with AI systems.

Other predictions are less optimistic. Seth Shostak, the senior astronomer at SETI, believes that AI will succeed humans as the most intelligent entities on the planet. “The first generation [of AI] is just going to do what you tell them; however, by the third generation, then they will have their own agenda,” Shostak said in an interview with Futurism.

However, Shostak doesn’t believe sophisticated AI will end up enslaving the human race — instead, he predicts, humans will simply become immaterial to these hyper-intelligent machines. Shostak thinks that these machines will exist on an intellectual plane so far above humans that, at worst, we will be nothing more than a tolerable nuisance.

Fear Not

Not everyone believes the rise of AI will be detrimental to humans; some are convinced that the technology has the potential to make our lives better. “The so-called control problem that Elon is worried about isn’t something that people should feel is imminent. We shouldn’t panic about it,” Microsoft co-founder and philanthropist Bill Gates recently told the Wall Street Journal. Facebook’s Mark Zuckerberg went even further during a Facebook Live broadcast back in July, saying that Musk’s comments were “pretty irresponsible.” Zuckerberg is optimistic about what AI will enable us to accomplish and thinks that these unsubstantiated doomsday scenarios are nothing more than fear-mongering.

Some experts predict that AI could enhance our humanity. In 2010, Swiss neuroscientist Pascal Kaufmann founded Starmind, a company that plans to use self-learning algorithms to create a “superorganism” made of thousands of experts’ brains. “A lot of AI alarmists do not actually work in AI. [Their] fear goes back to that incorrect correlation between how computers work and how the brain functions,” Kaufmann told Futurism.

Kaufmann believes that this basic lack of understanding leads to predictions that may make good movies, but do not say anything about our future reality. “When we start comparing how the brain works to how computers work, we immediately go off track in tackling the principles of the brain,” he said. “We must first understand the concepts of how the brain works and then we can apply that knowledge to AI development.” A better understanding of our own brains would not only lead to AI sophisticated enough to rival human intelligence, but also to better brain-computer interfaces to enable a dialogue between the two.

To Kaufmann, AI, like many technological advances that came before, isn’t without risk. “There are dangers which come with the creation of such powerful and omniscient technology, just as there are dangers with anything that is powerful. This does not mean we should assume the worst and make potentially detrimental decisions now based on that fear,” he said.

Experts expressed similar concerns about quantum computers, lasers, and nuclear weapons: applications of those technologies can be both harmful and helpful.

Definite Disrupter

Predicting the future is a delicate game. We can only base our predictions on what we already have, and yet it’s impossible to rule anything out.

We don’t yet know whether AI will usher in a golden age of human existence, or if it will all end in the destruction of everything humans cherish. What is clear, though, is that thanks to AI, the world of the future could bear little resemblance to the one we inhabit today.
